What FTC Sticks Are Telling Adtech

Companies operating in the US have long contended with the Federal Trade Commission as their principal privacy regulator and effective policymaker, especially given the inability of the US Congress to agree on a comprehensive privacy law. The California Privacy Protection Agency (CPPA) has stepped up its activity over the last two years, becoming an ‘FTC West’ in the eyes of close industry watchers.

Adtech companies, with their data collection at scale across third-party sites, often feel the hot gaze of privacy regulators around the world. In the US, they now face twin authorities pushing the policy envelope, with perhaps more on the way.

Tracking regulatory activity in the Adtech space can feel disjointed, with a myopic focus on the particulars of each new case. In this piece, we focus on recent US enforcement activity and try to extract the common threads across these cases to evaluate higher-level policy priorities.

The privacy cops can be cheap with the carrots, but they have plenty of sticks, and as many nails to drive their points home.

Collectively, what are they trying to tell us?

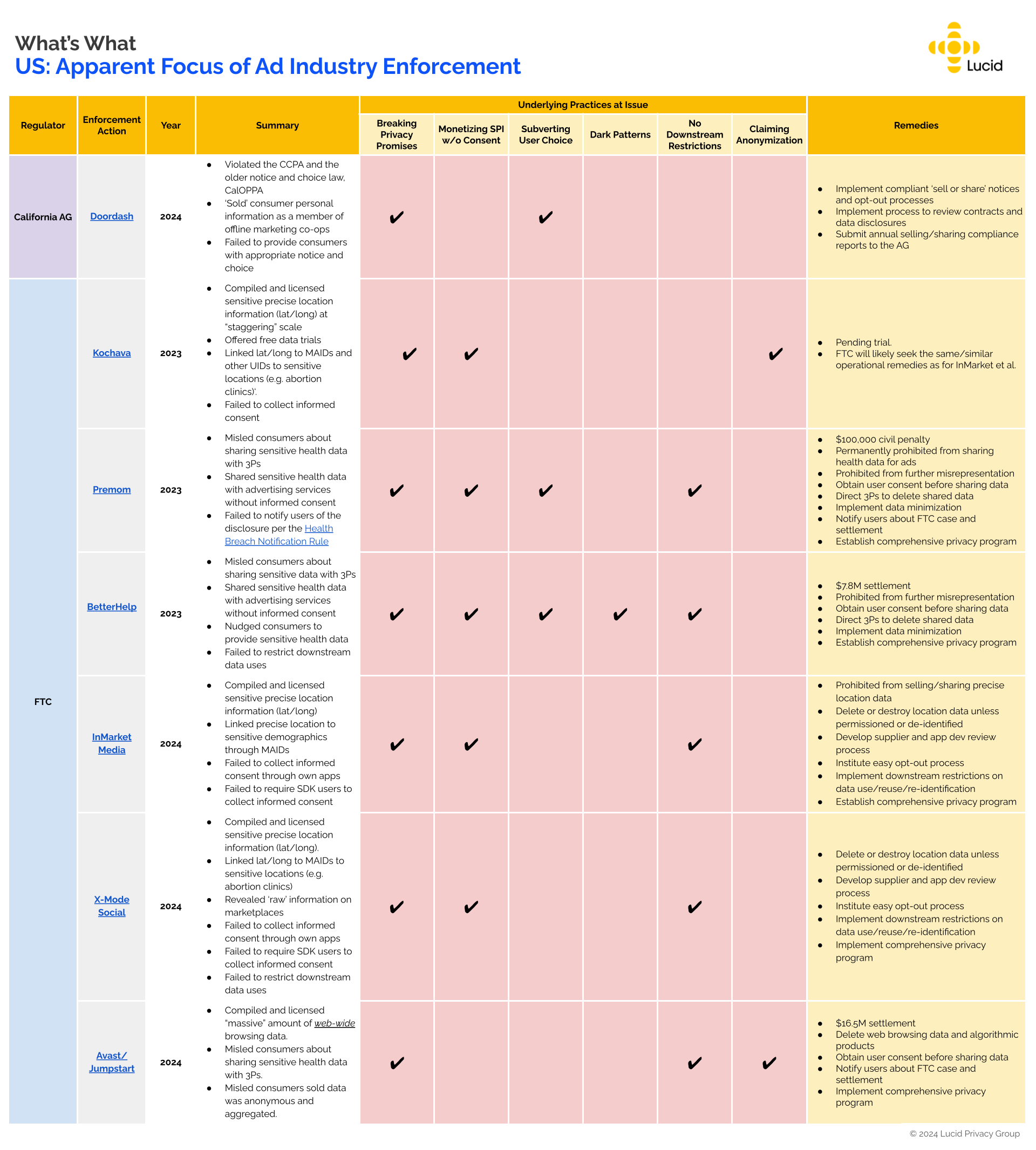

Over the last few years, regulators have announced enforcement actions in eight Adtech-related cases. Some of these cases did not target an Adtech company directly but implicated ad technology the target was using, often to market its own products and services. Each of these actions warrants its own thorough legal analysis, but we'll address each in turn here with an eye on the big picture.

First, let’s look at the patterns.

- Premom, a fertility-tracking app.
  - Regulator: FTC
  - Allegations:
    - Misled consumers about sharing sensitive health data with third parties.
    - Shared sensitive health data with advertising services without informed consent.
    - Failed to notify users of the disclosure, as required by the Health Breach Notification Rule.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Monetizing sensitive personal information without consent
    - Subverting user choice
    - No downstream restrictions

- BetterHelp, an online mental health service.
  - Regulator: FTC
  - Allegations:
    - Misled consumers about sharing sensitive data with third parties.
    - Shared sensitive health data with advertising services without informed consent.
    - Nudged consumers to provide sensitive health data.
    - Failed to restrict downstream data uses.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Monetizing sensitive personal information without consent
    - Subverting user choice
    - Dark patterns
    - No downstream restrictions

- Kochava, a mobile data aggregator and marketplace.
  - Regulator: FTC
  - Allegations:
    - Compiled and licensed sensitive precise location information (lat/long) at “staggering” scale.
    - Offered free data trials.
    - Linked lat/long data associated with mobile advertising IDs (MAIDs) and other unique identifiers to sensitive locations (e.g., abortion clinics).
    - Failed to collect informed consent.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Monetizing sensitive personal information without consent
    - Claiming anonymization

- InMarket Media, a mobile data aggregator and SDK provider.
  - Regulator: FTC
  - Allegations:
    - Compiled and licensed sensitive precise location information (lat/long).
    - Linked precise location data to sensitive demographics through MAIDs.
    - Failed to collect informed consent through its own apps.
    - Failed to require SDK users to collect informed consent.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Monetizing sensitive personal information without consent
    - No downstream restrictions

- X-Mode Social, a mobile data aggregator and SDK provider.
  - Regulator: FTC
  - Allegations:
    - Compiled and licensed sensitive precise location information (lat/long).
    - Linked lat/long data associated with MAIDs to sensitive locations (e.g., abortion clinics).
    - Revealed ‘raw’ information on marketplaces.
    - Failed to collect informed consent through its own apps.
    - Failed to require SDK users to collect informed consent.
    - Failed to restrict downstream data uses.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Monetizing sensitive personal information without consent
    - No downstream restrictions

- Avast/Jumpshot, an antivirus and antimalware software provider.
  - Regulator: FTC
  - Allegations:
    - Compiled and licensed “massive” amounts of web-wide browsing data.
    - Misled consumers about sharing sensitive browsing data with third parties.
    - Misled consumers into believing that the data it sold was anonymous and aggregated.
  - Underlying practices at issue:
    - Breaking privacy promises
    - No downstream restrictions
    - Claiming anonymization

- DoorDash, a popular food delivery app.
  - Regulator: California Attorney General
  - Allegations:
    - Violated the CCPA and the older notice-and-choice law, CalOPPA.
    - ‘Sold’ consumer personal information as a member of offline marketing co-ops.
    - Failed to provide consumers with appropriate notice and choice.
  - Underlying practices at issue:
    - Breaking privacy promises
    - Subverting user choice

Extrapolating the underlying concerns

Taken together, these cases support a few conclusions about which practices regulators take real issue with.

1. Truth in policy. Every one of these cases refers in some way to privacy promises the company made, and to the company’s failure to live up to them.

   Unlike in the EU, where regulators tend to focus more on the substance of compliance, US regulators, and the FTC in particular, still rely on the legal hook of a privacy policy detailing data flows and rights, and on a company’s failure to honor its own policy.

   Remember that your privacy policy is effectively a contract you are signing with the regulator, not just with the user. Make sure your commitments remain current and truthful over time.

2. Prior informed consent. All but one of the FTC cases (Avast/Jumpshot) include allegations of monetizing sensitive personal information without consent. (See #3 below.)

   Viewed across the cases, this is the strongest signal. The FTC has clearly prioritized going after companies that use information it views as sensitive, and health and precise location data top the list of data categories the agency is actively regulating. The FTC is also increasingly applying a strict definition of consent, one that often feels impractical to the market.

   A requirement to meet a GDPR-style high bar for consent (under the CCPA Regulations, too) can be a prohibition by another name.

3. Post-Dobbs data ‘sensitivity’. Looking a layer deeper at what the FTC means by ‘sensitive’ personal data, we find the agency pushing definitional boundaries, and the implications are profound.

   We used to live in a world where sensitive personal data was tightly defined and companies operated with much greater certainty. Of course, companies then took advantage of those rigid definitions, running highly effective marketing on demographic inferences and all manner of related location, interest, and behavioral signals that recreate the same sensitive marketing categories.

   In response, the FTC is throwing open the term ‘sensitive personal information’, effectively saying ‘it’s sensitive if you are using it to market into sensitive categories.’ The two most critical departures here are ‘browsing data’ (common web history information, which can feed all manner of sensitive inferences) and health condition inferences, which are clearly much broader than prescription information or diagnoses.

   Both of these definitional expansions warrant their own deep dives, but suffice it to say that the FTC has turned a very narrow term upside down.

4. User agency and choice. Another theme common to both the FTC and the California AG is businesses subverting genuine user choice.

   Here, regulators are scrutinizing specific language, especially at the point where users make decisions about their data. Registration forms, opt-ins, and other places where people view data usage disclosures, whether at the start of a relationship or when sharing specific data with the company, have to be complete and accurate.

   To reinforce the point above, your public language forms a legal (and social) contract, and that contract is subject to regulatory review and enforcement.

5. Contractual follow-through. The second most common theme across the cases is a lack of restrictions on downstream data recipients, whether in licensing or other agreements.

   The FTC is very focused on companies that collect vast quantities of data and on whether those companies prevent partners and clients from misusing it. In a typical scenario, the target company collects data from millions of consumers but promises not to use the data in any manner that might be considered ‘sensitive.’ It might even live up to those promises.

   But if it then turns that data over to downstream partners and clients without technical or legal restrictions, the target company may be handing data to other companies to market without the requisite permissions or, worse, to generate sensitive inferences for invasive targeting or to engage in prohibited activities.

   Here we have perhaps the most important catalyst for enforcement. The FTC and the other tigers at the gate will not stand for companies facilitating privacy harms while shrugging their shoulders or playing Jedi mind games.

Other invitations for deeper scrutiny

The FTC does not appear shy about using its unfairness and deception authority to look for symptoms of deeper misconduct. In particular:

- Manipulative UX ‘dark patterns’, which appear to be a ready lens through which you can view the other themes, especially subverting user choice.

- Claims of anonymization, while not central to the reviewed cases, may add to the legal woes of companies otherwise engaging in practices the FTC is targeting. They also speak to the agency’s efforts to reform the archaic ‘PII’-isms of the US legislative paradigm. Put plainly, Lina Khan’s FTC is tech-savvy and tired of companies using ‘anonymization’ sloppily with consumers, and in some cases exploiting the term to their advantage.

How did we do with our mapping of US regulatory themes for Adtech? Did we miss one?

Where is all of this going in the next 18 months, especially as the CPPA ramps up?