AI Regulation: US, UK and other approaches

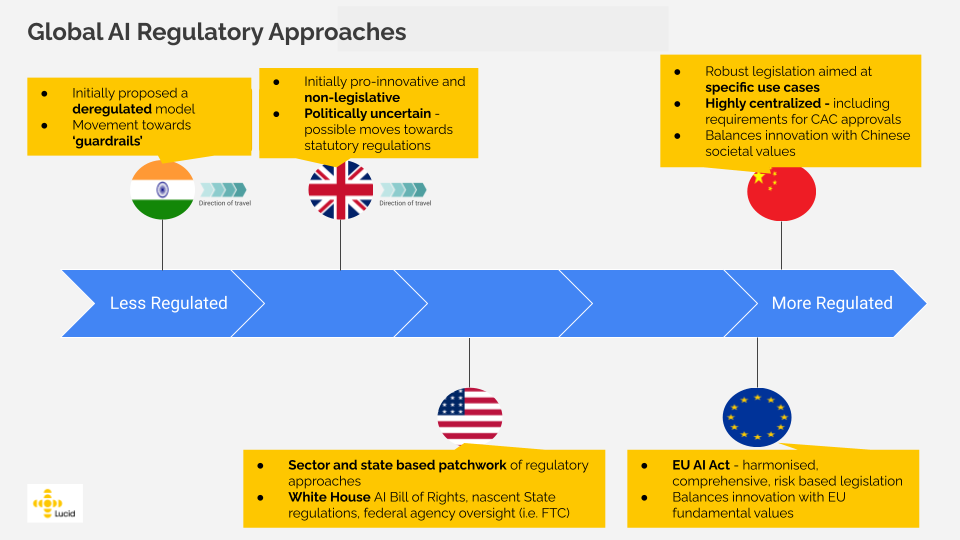

We continue our four-part deep dive into the future of AI regulation by examining some contrasting regulatory approaches that are rapidly developing in other global jurisdictions, including the UK, the US, China and India.

In Part 1 of this series, we explored the developing European approach to AI regulation, as enshrined in the proposed EU AI Act, which seeks to establish a comprehensive set of rules for the development, deployment, and use of AI systems in the EU, with a particular focus on high-risk AI applications.


The UK Approach

AI regulation in the UK context is overtly political. With the government rocked by successive political scandals and crises (Boris Johnson, Liz Truss), facing impending elections that will be difficult for it, and still attempting to deliver elusive Brexit dividends, AI regulation in the UK is best seen through the lens of AI diplomacy: a tool for domestic and international realpolitik. Until relatively recently, the UK was able to wield substantial power by virtue of its membership of the European Union, and post-Brexit politics is strongly coloured by the UK’s need to prove that it can make its way in the world alone, as promised.

In line with its post-Brexit data strategy, the UK is continuing to diverge from EU regulatory approaches with its March 2023 white paper, ‘A pro-innovation approach to AI regulation’, whose title is particularly telling. In contrast with the EU AI Act, the UK is proposing a non-statutory framework: there will be no new AI-specific legislation, but existing domain regulators (ICO, FCA, CMA, etc.) will be expected to apply a set of overarching principles governing the use of AI, potentially backed in future by a statutory duty to have regard to those principles. While the UK’s choice is likely politically motivated, a number of legal academics do advocate such a ‘soft-law’ approach to AI regulation, arguing that traditional ‘hard-law’ legislation cannot keep up with the pace of technological development (the ‘pacing problem’).

Despite this early pro-innovation stance, set out in its own white paper and reinforced by government calls to “win the race” to develop AI technologies, Downing Street began to signal a possible change of approach in May 2023. Following a meeting between Prime Minister Rishi Sunak and senior executives from the AI industry, the UK government acknowledged “existential risks” related to AI development for the first time. This was followed by cross-party calls for new legislation to create the UK’s first AI-focused watchdog.

The UK’s position now seems to have been turned on its head. Rather than promoting the UK as a hub for AI innovation, Sunak seeks to position it as the global center of AI regulation, using his time with President Biden during a June visit to push for the formation of an international regulatory body based in the UK. Once again, it is hard not to view these developments as overtly political.

The UK has seen an exodus of EU bodies (such as the European Medicines Agency) that were once headquartered in the UK, and calls for a UK-based international AI governance organization are a clear attempt to shore up the UK’s somewhat beleaguered soft power. The proposed body would function similarly to the International Atomic Energy Agency, attempting to harmonize global standards for AI governance. The UK will host the world’s first global AI Summit later in the year, where such plans will be discussed with leaders from ‘like-minded’ countries (the clear and important subtext being that Russia and China may not receive an invitation).

The UK is an excellent case study of the intersection of public policy and politics. There may yet be UK legislation to regulate AI (the government no longer rules it out), but the approach the UK eventually settles on will likely continue to be shaped by political factors, and it remains uncertain, especially given impending elections.

The US Approach

As in the privacy domain, the US does not yet have concrete plans for comprehensive federal AI legislation; instead, it has a patchwork of current and proposed AI regulatory frameworks. Several federal and state bodies are taking steps to address AI risks and to issue guidelines for the responsible development and use of AI.

The White House has published a non-binding, principles-based Blueprint for an AI Bill of Rights, which provides overarching guidance on how AI should be developed and deployed, and a number of federal bodies have begun work to embed its principles into their use of AI systems.

Several jurisdictions, including New York City, Illinois and Maryland, have introduced laws regulating automated employment decision tools (AEDTs) that use AI to screen candidates or make employment decisions. New York City’s law (Local Law 144) requires AEDTs to undergo an annual bias audit, with a summary of the results made public. The Equal Employment Opportunity Commission (EEOC) has also launched an initiative on algorithmic fairness in employment, issuing guidance on the use of AI tools in hiring to avoid unintentional discrimination against people with disabilities.

The EEOC has provided a technical assistance document to help companies comply with the Americans with Disabilities Act (ADA) when using AI tools in hiring, while reminding them that they remain accountable for hiring decisions made by AI systems. This focus on AI in employment aligns with the high-risk designation of such activities in the EU AI Act, again underscoring the importance of the HR function in AI compliance efforts.

A number of other state legislative and regulatory proposals are currently under consideration and may develop further through 2023/24:

- California SB 313, creating an Office of Artificial Intelligence to govern AI use in state organizations.

- California AB 331, requiring AI impact assessments in some cases.

- Colorado Algorithm and Predictive Model Governance Regulation.

- Connecticut SB 1103, establishing an Office of Artificial Intelligence.

- DC’s Stop Discrimination by Algorithms Act of 2023 (reintroduced).

- Texas HB 2060, creating an AI advisory council.

The FTC has also released guidance for businesses that use or plan to use AI, focusing on transparency and accountability and noting that the existing remits of the FTC and other federal agencies (CFPB, DOJ, EEOC, FCC) already extend to AI systems. The guidance recommends that companies provide clear explanations of how their AI models work and ensure that their models are fair, accurate and free from bias. The FTC has also indicated that it will use its enforcement powers against companies that engage in unfair or deceptive practices related to AI.

Notably, OpenAI is already the subject of such an FTC investigation, which goes beyond false claims and deceptive practices to cover personal data processing, the possible provision of inaccurate responses, and the “risks of harm to consumers, including reputational harm” arising from ChatGPT. This investigation is the clearest example yet of the US approach to regulation, in which federal agency oversight is likely to play a leading role.

Several state data privacy laws may also affect the development and use of AI. California’s CCPA, Colorado’s CPA, Virginia’s VCDPA, Utah’s UCPA and Connecticut’s CTDPA require businesses to disclose what personal information they collect and how it is used, and give consumers the right to opt out of certain uses of their data, in some cases including automated decision-making. The CPRA’s regulations covering automated decision-making technology (ADMT) are still pending, and we can expect them to borrow from the EU frameworks and perhaps from NIST (the National Institute of Standards and Technology has released an AI Risk Management Framework, a voluntary, standards-based framework for AI governance).

Unless ongoing calls for federal legislation succeed, the US is likely to continue its mosaic approach, blending state legislation with federal agency oversight, much as it does with privacy regulation. The EU AI Act (like the GDPR) is harmonized, simple and comprehensive, but also monolithic and not sector-sensitive. By contrast, and again by analogy with privacy regulation, the US approach to AI regulation sacrifices simplicity and completeness for sensitivity and nuance at the sector and state level.

The Chinese Approach

China is regulating AI in a robust and targeted manner. Currently, three major sets of rules relating to AI are, or will soon be, in force: the 2021 rules on recommendation algorithms, the 2022 rules on synthetically generated content, and the 2023 draft rules on generative AI. The speed at which China can regulate specific AI issues is impressive: draft rules on generative AI were published in April this year, in response to the generative AI explosion at the end of 2022.

We expect China to continue developing agile legislation to address new AI risk contexts as they emerge. As in the EU, China appears to be attempting to strike a balance between economic innovation and the safeguarding of societal values. The societal values in question are, of course, different from those protected in Europe, and include the alignment of AI outcomes with core socialist values, social morality and public order.

In practice, however, a number of the proposed safeguards align quite closely with those in the proposed EU AI Act: prevention of discrimination, transparency of information, intellectual property rights, data privacy, etc. Interestingly, the latest proposed measures appear to require users to provide their real-world identities before using generative AI systems, and (analogous to some of the privacy processes embedded in the Personal Information Protection Law, or PIPL) organizations must submit assessment documentation to the Cyberspace Administration of China (CAC) for approval. Like the PIPL, Chinese AI regulation is therefore more centralized (critics may use the term authoritarian) than European regulation, even if the technical and operational implications are broadly similar.

The Indian Approach

India initially positioned itself firmly in the deregulatory camp, with the Indian Ministry of Electronics and IT confirming in April that the country did not intend to regulate AI. Following a trajectory similar to the UK’s, however, India has begun to shift towards a more balanced approach, with the Union IT Minister confirming in June that India will act to keep its citizens safe from the dangers of AI.

India provides a useful example of a developing country hoping to harness the burgeoning opportunities of AI technology (which it is very well placed to benefit from) as part of its economic development transition. We see here echoes of the ‘just transition’ debate in climate change: less economically developed countries may have a stronger incentive to deregulate in order to promote innovation and growth, even though the impacts of such local deregulation are felt globally.

We are likely to get a first glimpse of how India envisages AI regulation in the coming months, with the expected publication of the first draft of the Digital India Bill. The Minister for IT has already confirmed that the bill will contain AI ‘guardrails’, noting the ambitious scope of the legislation: “We (India) are signaling to the world as the largest connected nation we are shaping the guardrails and through a process of consultation and engagement, trying to create a framework that other countries can follow”.

Conclusion

We have seen from our examples in this article, and from our previous discussion of the EU AI Act, that the development of AI regulation intersects with domestic politics, international geopolitics, diplomacy, culture and global development. Furthermore, if the worst of the possible risks of AI development materialise, AI will be a truly international issue, with existential risks that cross borders and transcend nation-state actors.

Both factors mean that the global development of AI regulation is perhaps more akin to ongoing attempts to address the climate crisis, or to earlier international efforts to control the development of nuclear arms, than to the trajectory of privacy (or other domain-specific) regulation. That said, AI regulation and privacy regulation are still similar in many regards, and our more mature understanding of how privacy regulation is operationalised can directly inform the operationalisation of new AI regulation.

Stay tuned for Part 3 of this series, which will examine exactly this.